Over 150 publishers (society, commercial, and university press), librarians, funders, service providers, university administrators, faculty, and researchers attended the virtual CHORUS Forum on Open Access Policies and Compliance in a Global Context on 30 July 2020. CHORUS Chairman Alix Vance (AIP Publishing CEO) kicked off the day by welcoming participants and introducing speakers.

Here’s a summary of the program’s presentations:

The Global Landscape – Beyond the Wave

Session One’s speakers provided unique perspectives and insights into changing global compliance mandates for open and public access on government-funded research, focusing first on the big picture and then on the EU, UK, and Japan. To provide a framework for evaluating the progression of open access over the last 20-odd years, moderator David Crotty (Oxford University Press Journals Policy Editorial Director) drew on competing theories of evolution: the first is gradualism, contentiously called “evolution by creeps” by the other faction, and it says that evolution is slow steady change continuously over time. The other is punctuated equilibria, termed “evolution by jerks” by gradualists, which says that species are stable until a big event in the environment causes a sudden period of rapid change. According to David, after relatively gradual growth over a long period, OA is now experiencing much more rapid (and bumpy) change.

Dan Pollock (Delta Think Chief Digital Officer) tapped into Delta Think’s OA Data & Analytics Tool for metrics to gauge the uptake of open access, to look at how different perspectives yield different results, and to examine policies and mandates, the key influencer of OA uptake.

Pointing out that the term “open access” means different things to different people, Dan noted that the meaning of the numbers cited by sources depends on the definitions they use. He cited a recent OA estimate that 53% of articles are published OA, but noted that this calculation included “formally Open Access” content (that is both free to read and free to reuse), and “Public Access” content (that is free to read, but not to reuse). The OA portion of published articles dropped to just over 40% using the more formal definition.

Various factors affect the proportion of OA. As a rule, the broader the scope and geography, the higher the proportion of OA. The more focused the scope — especially on major publishers and wealthier nations — the lower the OA uptake. Some disciplines show a greater uptake of OA than others.

One constant is that the proportion of OA has been growing over time – most likely the result of growing mandates and policies. There are currently about 1,000 policies globally, more than three quarters of which are registered by universities / research institutes, and the rest by funders and initiatives like Coalition S. Europe and North America lead the regional charge, with the US and the UK leading at the national level. The data generally support a relationship between the policies and the actual use of OA. The UK, European, and North American countries have above-median OA uptake, while Asian countries have lower-than-median uptake. Brazil is on the higher side, due to the SciELO program.

Turning to Plan S, Dan noted that some journals are disproportionately affected by mandates. One study suggested that nearly a third of the articles in some high-impact journals will have to be made Plan S compliant as its mandates roll out. Meanwhile, multi-author papers have a multiplier effect. If just one author of a multi-author paper is funded through a Plan S funder, then the paper must comply with its requirements. So, even though Plan S will directly cover less than 1% of global R&D spending, it will influence between 4% and 5% of all articles published.
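The multiplier effect can be illustrated with a minimal sketch. Everything in it is hypothetical: the funder names, the four papers, and the helper `must_comply` are invented for illustration and are not drawn from Delta Think’s data. It only shows why a rule that applies when any one author is funded covers a larger share of papers than of authors.

```python
# Hypothetical illustration only: funder names and papers are invented.
PLAN_S_FUNDERS = {"Funder A"}  # placeholder for a Plan S funder


def must_comply(author_funders):
    """Return True if at least one author is funded by a Plan S funder.

    author_funders is a list with one set of funder names per author.
    """
    return any(funders & PLAN_S_FUNDERS for funders in author_funders)


# Each paper maps to a list of per-author funder sets.
papers = {
    "paper-1": [{"Funder A"}, {"Funder X"}, set()],
    "paper-2": [{"Funder X"}, {"Funder Y"}],
    "paper-3": [set(), {"Funder A", "Funder Y"}],
    "paper-4": [{"Funder Y"}],
}

# Papers in scope: any author with a Plan S funder pulls the whole paper in.
in_scope = [pid for pid, authors in papers.items() if must_comply(authors)]
share_of_papers = len(in_scope) / len(papers)

# Share of individual authors who hold Plan S funding.
all_authors = [funders for authors in papers.values() for funders in authors]
funded_authors = [f for f in all_authors if f & PLAN_S_FUNDERS]
funded_author_share = len(funded_authors) / len(all_authors)

print(f"Authors with Plan S funding: {funded_author_share:.0%}")
print(f"Papers that must comply:     {share_of_papers:.0%}")
```

In this toy data set, a quarter of the authors hold Plan S funding but half of the papers fall in scope. The same mechanism, at a very different scale, is behind the gap between the share of spending covered and the share of articles influenced.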

Alicia Wise (Information Power Director) explained that to understand OA in Europe, one has to look at funder policies, and how universities, libraries, and researchers are thinking about them. Europe provides robust government funding for both research and university infrastructure. Library budgets are influenced by the amount of research money available. University libraries and library consortia provide funding for publications access, and increasingly, publishing services. European funders are paying for OA through grants to individual researchers, universities, libraries, and research offices. Funders and university libraries are increasingly aligned to drive OA, constrain costs, and achieve value for research investment.

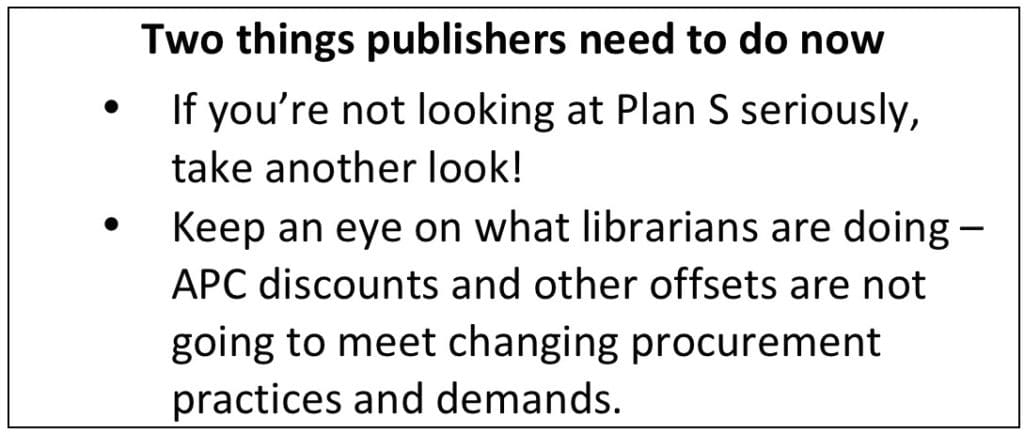

The drivers for change operate differently within countries and across the continent. Alicia provided a close look at OA / open science policies and practices at a national, regional, and local level in France, Germany, and the UK. She also discussed Coalition S, a multi-national coalition of funders centered in the EU that has put forth Plan S and is committed to pushing the boundaries on what is comfortable for everyone. Its zeal for some specific rapid changes recently led one important funder – the European Research Council – to withdraw its support for Plan S, despite being a strong Open Access proponent. But Coalition S is driving innovation and increasing the amount of engagement and the pace of movement towards OA with its constant stream of activities and requirements. Beginning next year, Plan S expects publishers to have hybrid journals on a transformative path in order for researchers to continue getting funding from Coalition S members. Plan S has an impact beyond what its funders’ grant recipients do; it has served as a catalyst for change in library and university procurement activity.

Yasushi Ogasaka (Director, Department of R&D for Future Creation, Japan Science and Technology Agency) described the open science policy landscape in Japan and provided a chronological overview of activities regarding open-science policy at various bodies. One of Japan’s main funding agencies, JST also operates a journal platform and database. The agency has been a pathfinder for open science in Japan. While much of the focus has been on data, JST is also forging a path for open access to content, and Dr. Ogasaka provided information on those activities in his presentation.

In Japan, there is a master plan for research policy called the Science and Technology Basic Plan, which cascades down from the Cabinet level to the various ministries, agencies, and councils. It is renewed every five years – the current plan began in 2016. There was only a brief OA mention previously, but the 2016 Basic Plan definitively promotes open science with new targets incorporated by the Cabinet-level group, for establishing a system for the promotion of open science, recognizing its importance for expanding the reach and impact of research, and managing its roll out. This prompted the main funding agencies to establish open science policies (2017) and issue a detailed Integrated Implementation Strategy (2018). Since then, considerable progress has been made on goals that were set to establish a national data infrastructure (by 2020), and data management policies for the 24 National Institutes (2020) and 14 funding bodies (2021).

Community engagement was introduced to the process through the Research Data Utilization Forum working groups (established 2016), and the annual Japan Open Science Summit (inaugurated 2018). Last year’s Summit drew 560 participants; this year’s program was cancelled due to the pandemic, but 2021 plans are underway. Community stakeholders now have a role in policy making as their recommendations are influencing the next round of plans.

Despite the OA policies at Japan’s major universities, institutes, funders, and ministries, and JST’s OA mandate for its grantees, policy discussion about OA has not been very active to date. That should ramp up as a result of a recent ministry-level discussion about journal subscription costs, APC support, publication of the output of publicly funded research, and evaluation of researchers. The 2021 Basic Plan is likely to place increased emphasis on many aspects of open science, and MEXT will be addressing these issues.

In addition to taking the lead on open science, open access, and data management policies in Japan, JST collaborated with CHORUS on a customized mechanism to monitor compliance. The CHORUS Dashboard allows JST to promote OA by monitoring the publication and OA status of JST-funded articles, and by making available metadata and information that would otherwise not be easily accessible.

Listen to the Q&A on researcher burden solutions, OA for Japanese publications, Plan S, preprints, and transformative agreements.

Challenges and Progress for Academia in Accelerating Public Access to Research Data

Moderator Howard Ratner (CHORUS Executive Director) introduced the session on the problems university research offices face in complying with data-sharing requirements, why public access to research data is important to scholarship, and what actions are being taken to accelerate its implementation in academia.

Sarah Nusser’s (Iowa State University Professor of Statistics and former Vice President for Research) experiences in Iowa State’s research office and with the AAU/APLU Accelerating Public Access to Research Data Project give her a unique perspective. She emphasized that success rests on “the primary actors in this drama,” the academic researchers who produce the data and need stronger incentives and rewards to take on the work that is required to share data. There is a pressing need to make it easier to plan, document, and prepare data for sharing. There is an equally pressing need to minimize the costs and burdens for their institutions to help them to do so.

Both researchers and institutions are already juggling a lot of changes, including the move to more team-based, interdisciplinary scholarship, greater transparency and openness in research protocols, increased focus on research integrity, and heightened emphasis on managing the balance between science openness and security. Understandably, the responses to OA mandates by some researchers and administrators are not uniformly positive, due to the extra work and lack of clarity on the benefits and motivations for taking on the extra compliance burden. It’s important for them to understand that the Internet has made it much easier to collaborate and share, causing a significant shift in how research is practiced, but the fundamentals of scientific inquiry have not changed. The long-standing traditions of exchanging ideas and scrutinizing findings remain fundamental to demonstrating the rigor of the work and earning the public trust, even if the way we are sharing information changes.

Sarah pointed out that there will be huge payoffs from transitioning to open science: more rapid and widespread release of findings and associated data can accelerate knowledge dissemination, extend its application to new settings, and improve rigor and information quality. There’s the potential for researchers and institutions to be more widely credited and rewarded for their impact and contribution. There can be more bang for the buck for sponsors, who benefit from discoveries arising sooner.

Different stakeholders have different visions for the future, and incomplete knowledge of the full perspectives of all of the other actors. Data sharing is a relatively recent practice, especially compared to public access to publications, and current practices are highly variable across disciplines and funder requirements. The community has to work collectively to change the culture of how research is conducted and rewarded.

Two organizations, the Association of American Universities (AAU) and the Association of Public and Land-grant Universities (APLU), banded together in 2017 to help foster change in academia around open data, with support from the NSF and NIH.

It was fairly easy to come up with shared goals: minimizing burden for all, prioritizing data quality, evaluating published data, weighing the benefits of sharing data in relation to the costs of creating access and preserving the data after the fact, releasing clearer guidance, and harmonizing compliance requirements, monitoring, and enforcement wherever possible. The next step was to galvanize implementation in academia, using a workshop format to bring together teams from a group of university research offices, libraries, faculty, information technology departments, and policy that have successfully driven the initiative forward. Challenges remain: establishing effective institutional support structures, continued aversion to sharing data, lack of awareness about available campus services, and lingering concerns about the value of sharing data. Fortunately, tools are beginning to emerge to help researchers prepare, plan, and document data for sharing and potentially ease the time-consuming burdens that stand in the way of progress. The focus now is to reach out to more campuses, develop a practical guide for widespread implementation, and come up with a long-term strategy for advocacy and facilitation – work that should wrap up in the next year. Sarah noted that events like the one CHORUS is hosting help stakeholders understand each other’s perspectives and integrate them so progress can be made going forward.

Listen to the Q&A on dataset citations, affiliation metadata, plausibility of harmonization, and the need for data specialists.

The State of Public Access: The US Funder Perspective

Moderator Brooks Hanson (American Geophysical Union Executive Vice-President, Science) began the session on the current and expected state of play for open science policy by welcoming four U.S. government funding agency representatives. These funders have played and continue to play an important role in the evolution of open research data and open access to publications, collaborating with stakeholders across the research communications landscape. Brooks noted that the long history of cooperative experimentation has produced key initiatives; for example, arXiv was initially developed by the U.S. Department of Energy. Lessons learned along the way helped to shape PubMed Central and lay the ground for its continued growth. Other agencies, such as the National Science Foundation, are providing support for data repositories across the sciences.

Kathryn Funk, MLIS (National Institutes of Health PubMed Central Program Manager), referencing the definitions in Dan Pollock’s earlier presentation, said outright that NIH does not have an Open Access policy; it has a Public Access policy.

As the US’s medical research agency, NIH oversees the National Cancer Institute, NIAID, NLM, and other institutes and centers, supports an intramural research program, and awards tens of thousands of research grants annually. More than 100,000 peer-reviewed papers are published each year reporting on this research. NIH has partnered with other government and private funders in the US and internationally to provide manuscript submission and public access support via PMC, and to coordinate when multiple policies apply.

The NIH Public Access policy requires researchers to deposit their peer-reviewed papers to PMC, where they are made accessible to the public within 12 months of publication, archived in an interoperable, machine-readable format, and made available for reuse and text-mining (according to their licenses). More than one million papers with NIH support are now publicly accessible via PMC (90% compliance). That the papers have been accessed more than a billion times demonstrates the policy’s huge impact. Preprints are not subject to the policy, but since 2017 NIH has been encouraging investigators to publicly post preprints and other interim research products with a CC-BY license, when appropriate.

NIH requirements are consistent with the FAIR data-sharing principles (findable, accessible, interoperable, and reusable), but the policy predates this framework. The question now is how the NIH can extend its framework to cover data. A 2019 proposed data management and sharing policy would expand on the 2003 policy that applies to large grants only. Data are the recorded factual materials necessary to validate and replicate research findings, regardless of whether they are used to support scholarly publications. However, because publications can provide insights about how data are being shared, the NIH is tracking the percentage of NIH-supported deposits to PMC with Data Availability Statements (DAS) and supplementary materials to gain a better understanding of how public access to publications and data overlap.

Andrea Medina-Smith (National Institute of Standards and Technology Metadata Librarian) explained that NIST is the US metrology institution, a government agency focusing on measurements, manufacturing, and cybersecurity. The vast majority of NIST research is intramural, conducted by the agency’s 3,400 employees at two campuses, with only a few external awards granted. The NIST laboratories and facilities create engineering, telecommunication, transmission, disaster response, and cybersecurity standards. NIST’s Public Access partnership with PMC requires that their researchers deposit their peer-reviewed articles into PMC. Technical reports that are not peer-reviewed and an annual compendium of conference abstracts / proceedings are archived in GPO’s govinfo repository. For data, NIST has a taxonomy to help researchers decide which public access requirements apply. The requirements differ for machine data (no public access requirements) and standard reference datasets (must be publicly accessible, fully reviewed, and preserved). Data can be posted to secondary locations once the NIST repository has a copy.

For the small number of NIST grantees, publishing in a CHORUS member journal and having the accepted manuscript or version of record publicly accessible satisfies the requirements. If papers are published in non-CHORUS member journals, PMC helps to make them publicly accessible.

The NIST Public Access policy does not have separate requirements for data not associated with articles, although the agency encourages everyone to publish as much data as possible to enhance discoverability and be more consistent with FAIR principles.

Beth Plale (National Science Foundation Science Advisor for Public Access) reported that about 95% of NSF’s $8 billion annual budget funds extramural research across the sciences. The agency instituted a Public Access plan in 2015, but its data management plan (DMP) requirement has been in place since 2011, and with it DMP review became part of the NSF peer review process. Because NSF covers the spectrum of scientific disciplines, peer review of DMPs acknowledges that the communities know how best to assess plans for data management.

NSF requires grantees to deposit their peer-reviewed paper (accepted manuscript) in NSF-PAR, its public access repository; the content becomes publicly accessible after a 12-month embargo. Since 2016, about 50,000 papers have been deposited, and the rate of deposit is steadily increasing.

NSF is expanding NSF-PAR to capture metadata about research products beyond journals and conference proceedings, starting with data in support of publications. Preprints do not fall under the current plan but are included in annual reporting. Reporting will be voluntary to start, and the agency doesn’t intend for NSF-PAR to become a repository for the data itself, but is instead relying on existing repositories to make the actual datasets publicly available. This approach, which is consistent with models for findability and accessibility being discussed by the National Science and Technology Council Interagency Working Group on Open Science, should enable greater discovery, access, and use of research products, help with compliance and accountability, allow the researcher to have more control, and address issues around the shelf life of data. Employing the different solutions available across the research data landscape can provide greater longevity, expertise, and a framework to address evolving needs.

Carly Robinson (US Department of Energy, OSTI Assistant Director for Information Products and Services) explained that the US Department of Energy provides approximately $13 billion annually in R&D funding. The funding flows out to USDOE’s 17 national laboratories and to grantees at universities and other institutions.

The Public Access Plan that USDOE released in July 2014 addressed both publication and data requirements. Since the fall of 2014, researchers must deposit the metadata and a link to the accepted manuscript (or the full text itself) in their institution’s repository to be made available through USDOE PAGES. Preprints do not fall under the current plan but are being explored. All research proposals selected for funding must have a data management plan describing how the research data will be generated, how it will be shared and preserved, and how it will be made publicly accessible at the time of publication.

The DOE National Laboratories’ compliance with the public access to publications requirements continues to increase yearly, with the current median rate at ~75%. There is still more work to be done with grantees to make sure that the requirements and policies are fully understood. The requirement of having a data management plan with funded R&D proposals is going well. There is an ongoing evaluation of current DMP guidance related to what’s happening more broadly in the federal government.

USDOE provides persistent identifier (PID) services, assigning DOIs to data, software, and technical reports. They will be launching new services this fall assigning DOIs to awards, conference posters, and presentations. USDOE leads the US Government ORCID Consortium. ORCID is currently working with Research Organization Registry IDs (ROR IDs). The end goal is to use PIDs to create connections through the research lifecycle, connecting funding to researchers to organizations to research outputs, to show the impact of USDOE funding.

Listen to the Panel Discussion and Q&A on the 2020 OSTP RFIs, preprints, plausibility of harmonization, and the need for data specialists, federal investment in sustainable infrastructure, and the one thing each agency would ask of publishers.

Closing Remarks

To wrap up, Alix Vance thanked all of the presenters and attendees for their participation in the Forum. She encouraged participants to register for the upcoming free webinar, Towards a US Research Data Framework, co-organized by STM, the Center for Open Science, and CHORUS on 17 September 2020 (11:00 AM to noon, EDT). Don’t miss the discussion of the merits of making research data more open and FAIR, moderated by Dr. Robert J. Hanisch (NIST), and featuring Shelly Stall (AGU), Terry Law (Environmental Molecular Sciences Laboratory), and Jonathan Peters (Virginia Tech).