At last month’s CHORUS Forum: 12 Best Practices for Research Data Sharing speakers addressed the Joint Statement on Research Data Sharing by STM, DataCite and Crossref. The forum was moderated by Howard Ratner, Executive Director, CHORUS and sponsored by AIP Publishing, Association of American Publishers, Crossref, GeoScienceWorld, and STM.

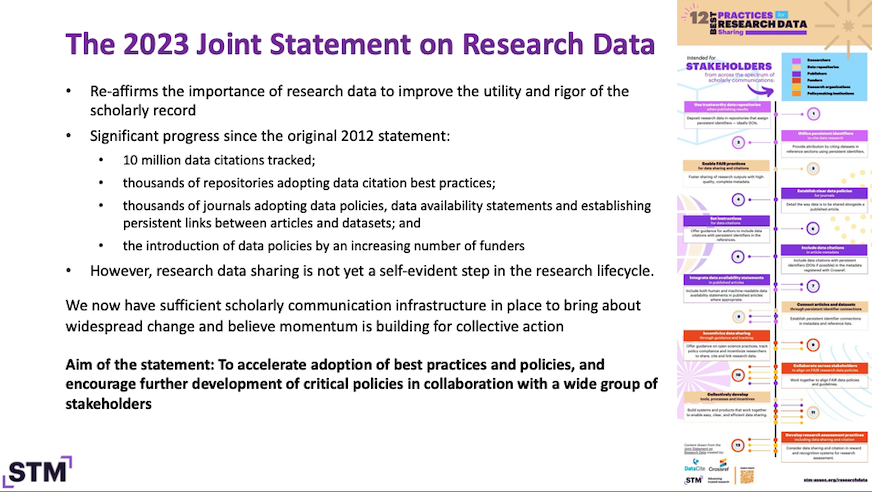

Hylke Koers, Chief Information Officer, STM Solutions, ignited the discussions by emphasizing the ongoing pursuit of elevating the scholarly record’s effectiveness and integrity through collaborative efforts. While recognizing the challenges in research data sharing, STM remains steadfast in its commitment to fostering the sharing of research data and that it is crucial to advance science and research. Starting with the Brussels declaration in 2007 to the Joint Statement in 2023, STM has collaborated with stakeholders to support the advancements of the statements with the approach to advance transparency, reproducibility, and providing opportunities for scientific discovery and collaboration.

https://www.stm-assoc.org/reearch-data-programs/bestpractices/

Highlighting the tangible progress achieved, Hylke noted an increase in journals with data policies with data availability statements and exposure of data citations for further utilization. This underscores the importance of research data to improve the utility and the rigor of the scholarly record. Noting the journey is ongoing, a board has been put in place to monitor progress, initiate further actions, and further interventions.

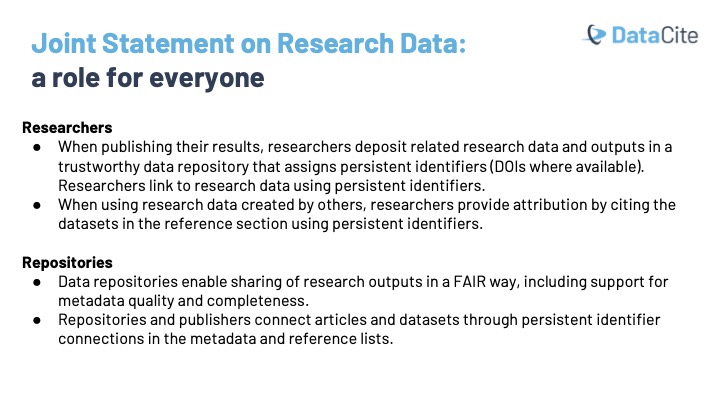

Helena Cousijn, Community Engagement Director, DataCite, highlighted the collective responsibility stretching from researchers to repositories. When publishing results, it is important for researchers to deposit data and other related outputs in repositories that assign persistent identifiers. If they publish an article based on the data, they should cite the dataset in the article, but also add the link to the article to the dataset metadata.

She also emphasized the pivotal role of repositories to ensure the infrastructure is in place to share data, capture data citations and link data to related outputs. While citations in articles form one avenue for establishing a data citation, repositories also play a pivotal role because the relationship between an article and a dataset can be established by the repository through the dataset metadata. When registering a DOI for the dataset, the repository can indicate which related identifiers exist, thereby making the information about the link available to the broader community.

Helena stressed that it’s very important to add the citation information wherever possible, through either the dataset metadata and article metadata so that as much information as possible about citations is present. This information is visualized in DataCite Commons and through the Data Citation Corpus.

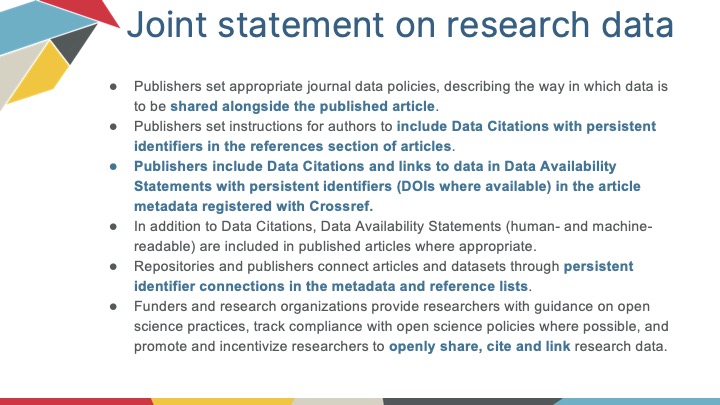

Patricia Feeney, Head of Metadata, Crossref, shared that Crossref has promoted data citations aiming to increase the citation visibility in the metadata. This effort involves bringing together disparate pieces of the scholarly record, including, but not limited to relationships, exposing internal metadata publicly, and enabling multi-party assertions. With the goal to create a reusable and open network of relationships connecting research organizations, people, things, and actions. Creating a scholarly record that the global community can build on forever for the benefit of society.

Emphasizing the significance of details, the primary objective is to ensure data availability through identifiable data availability statements, following basic citation best practices, utilizing persistent identifiers like DOIs, and by registering the appropriate metadata with Crossref. Patricia highlighted the challenges publishers face. The details are important, but overall, the call is to make data availability statements identifiable, findable by following basic citation best practices, using persistent identifiers like DOIs, and by registering the appropriate metadata with Crossref. Patricia said, “This, of course, I think is the hard part for publishers as this requires publishers to collect and record data as citations to send them to Crossref. They have to have them in the first place, which was not common practice years ago, but it’s becoming increasingly so and it’s absolutely necessary that those efforts continue.”

statements, following basic citation best practices, utilizing persistent identifiers like DOIs, and by registering the appropriate metadata with Crossref. Patricia highlighted the challenges publishers face. The details are important, but overall, the call is to make data availability statements identifiable, findable by following basic citation best practices, using persistent identifiers like DOIs, and by registering the appropriate metadata with Crossref. Patricia said, “This, of course, I think is the hard part for publishers as this requires publishers to collect and record data as citations to send them to Crossref. They have to have them in the first place, which was not common practice years ago, but it’s becoming increasingly so and it’s absolutely necessary that those efforts continue.”

You can find more at the Crossref Community Forum: https://community.crossref.org/t/delivering-data-citations/3769

Three endorses of the joint statement spoke of why these are so important and what they are doing to make data sharing possible within their organizations.

Katherine Eve, Policy Directory for Open Science, Elsevier, stated that Elsevier has a long history of supporting research data sharing. As a co-founder of Force 11, Elsevier played a role in developing the FAIR principles for data sharing. The Force 11 Data Citation principles are implemented across Elsevier journals meaning authors are encouraged to include data citations with DOIs as part of their reference list, and Elsevier’s production and publication systems can process these.

Elsevier empowers and enables researchers to store, share, discover and effectively reuse research data in a number of ways and is continually looking at ways to enhance systems and workflows to maximize data sharing. In line with journal policies, during the submission process, authors are prompted to share links to their datasets stored in a repository of their choice and provide data availability statements (DASs) which are human and machinery readable. Elsevier is experimenting with refining DAS options and the order in which they appear on journals with the goal of promoting greater data sharing unless there is truly a compelling reason not to. Elsevier stressed the goal shouldn’t just be about achieving data sharing, but making sure that the data shared is high quality and reusable. Members of the Elsevier research integrity and publication ethics team are therefore trained in various analyses of data validity, and are involved in testing and developing targeted automated checks. Peer review checks, particularly for scientific reasonableness, remain absolutely critical.

Katie explained that journals by their nature operate far along in the research process and cannot drive this change alone, which is why Elsevier particularly welcomes the joint statement’s call for collective action across stakeholders in the research community. “Partnership across stakeholders is essential to encouraging effective research data sharing practices throughout the research lifecycle,” Katie said.

Daniel Noesgaard, Science Communications Coordinator, Global Biodiversity Information Facility (GBIF) stated that GBIF is funded by the governments of our country’s participants, and currently stores more than 100,000 datasets from around 2200 publishers. In total, more than 2.6 billion species occurrences are available through GBIF. These records are downloaded at a rate of more than 160 billion per month, and they have been used in more than 10,000 peer reviewed journal articles. GBIF assigns DOIs to all the datasets that GBIF indexes but also assigns DOIs to queries, resulting in aggregated downloads across dozens, hundreds, if not 1000s of different occurrence datasets. GBIF follows the Darwin Core Standard, which is a community developed biodiversity data standard governed and ratified by the biodiversity information standards body.

Daniel mentioned that in 2017, GBIF started a campaign directed at authors of papers that use GBIF data, but fail to cite them using a DOI. And although it was a fairly intense effort it seems to have paid off as they are seeing DOI citing GBIF data in just over 60% of the papers logged. With the spirit of this joint statement that GBIF fully endorses, GBIF would love to improve the situation by working together with all relevant stakeholders, in particular, journal publishers and their staff to not only ensure that instructions for citing data are good, but also to work towards automatic editorial workflows that can flag bad citations so that they may be corrected before publication. Daniel also suggested that it may be time for journal publishers to take a step further from simply encouraging and recommending to actually implementing a mandatory policy for data citations, at least for the cases where we know that they are available.

Jamie Wittenberg, Assistant Dean for Research & Innovation Strategies, University Libraries, University of Colorado Boulder (CU) provided an institutional and research library perspective on the joint statement and mentioned several ongoing projects in the library that relate to the principles in the joint statement. The library’s institutional repository CUScholar achieved Core Trust Seal certification last year, and after many years of working towards the 16 requirements for best practices.

Item 11 on the joint statement is one that University of Colorado Boulder (CU) is just embarking on to address. CU is a pilot site for the California Digital Library and Association for Research Libraries, Institute of Museum and Library Services (IMLS) funded machine actionable data management plan project. A cross campus team that includes members of the library’s research computing office and research innovation office, Laboratory for Atmospheric and Space Physics, and our Office of Data Analytics was created to develop and evaluate workflows for leveraging machine actionable research data management plans. These new workflows for establishing machine actionable data management plans will improve collaboration between all areas of the university to facilitate data, publishing and sharing, and help support our researchers and implementing those best practices.

The biggest implementation challenge for CU in these principles is item 12. Part of the challenge is that CU doesn’t really have a standardized, transparent and widely adopted framework for metrics for data that can be used for evaluation.

Event page: https://www.chorusaccess.org/events/chorus-forum-12-best-practices-for-research-data-sharing/

Please make sure to watch the recordings and let us know (info@chorusaccess.org) what you are doing to make data sharing possible within your organizations. We would like to share your feedback with the speakers and others.